Building Better Prediction Engines

Sometimes, you just gotta build it yourself.

I was working for a B2B growth sales organization with the familiar problem of having too many leads to work and not enough time. The challenge was to think of ways that the sales organization might better target their limited time toward those prospects most likely to convert into business.

The conceptual model is simple, and has been used to predict discrete events for centuries. A subject (in this case the prospect) has a given set of characteristics X that yields a probability Y of the event occurring (in this case a sale). This probability can be expressed most easily as a binary ("I think this event will happen"), categorically ("I am very confident this event will happen"), or as a probability itself ("I am 95% confident this event will happen"). Even children will intuitively use this model in their heads when they're assessing whether or not to ask their parents for a cookie before dinner.
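To make the three ways of expressing a prediction concrete, here is a minimal sketch. The function name, the 0.5 decision threshold, and the confidence cut-offs are all illustrative assumptions, not anything taken from the project.

```python
def describe_prediction(probability, decision_threshold=0.5):
    """Express one probability as a binary call, a category, and a percentage.

    The threshold and the category cut-offs are hypothetical values chosen
    only for illustration.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")

    # Binary: "I think this event will happen"
    will_happen = probability >= decision_threshold

    # Categorical: "I am very confident this event will happen"
    if probability >= 0.9:
        confidence = "very confident"
    elif probability >= 0.7:
        confidence = "fairly confident"
    elif probability >= 0.5:
        confidence = "leaning yes"
    else:
        confidence = "unlikely"

    # Probability itself: "I am 95% confident this event will happen"
    return will_happen, confidence, f"{probability:.0%}"


print(describe_prediction(0.95))  # (True, 'very confident', '95%')
```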

The difficulty is that eventually you go from your parents' kitchen to a multi-million dollar company with operationally complex partnerships. What may previously have been a handful of considerations is now hundreds if not thousands of variables in X. Thank god for statistics!

Iteration #1: Third-party software

Like any good developer given a new task, I first looked to see if anyone else had already done this in a way I could meaningfully use. Fortunately for me, the company was already using an enterprise platform with predictive functionality. All I had to do was tell it what column to predict and, presto, it would use all the other data in that table to make the predictions!

Okay. Is that...too easy?

It's not uncommon for applications such as these to hide the details of their methodology in the interest of preventing people from copying their IP. But the thing is, if you're going to have a secret methodology...it has to work. In this case the predictions output by the standard model were accurate only 45% of the time. For a yes/no outcome, this means the sales team would have better luck randomly guessing what will convert than if they used this model. This performance can likely be attributed to the fact that a model needs to be trained against the specific data model; otherwise it will make assumptions about what is or is not important that may not be correct. So I decided to build a custom model, given my closer understanding of the data, to see if custom could outperform standard.

Iteration #2: Machine Learning

I decided to go with a Python model because all we're really doing is pulling, analyzing, and pushing data with an API. Python is a great language for data analysis, and especially great if you're just getting into programming. My plan was to build a classifier, which is a type of algorithm for grouping a population into sub-populations (in this case will/will not convert), and applying a continuous probability to its prediction using the following form.

After loading and cleaning the data, which any data engineer knows is going to be 75% of the job, I divided the dataset into 80% of historical records to train the model and 20% of historical records to validate the model's success. The reason the same data shouldn't be used for both is that a model scored against its own training data can look very good while being overfit, meaning it predicts the sample well but not data generally. For training my model I used a gradient boosting decision tree classifier from the scikit-learn ensemble module for ML in Python. Gradient boosting is a method that combines weaker models in stages, with each stage trained to correct the errors of the ones before it.
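The split-and-fit step might look roughly like the sketch below. The real lead data is not shown in this post, so the synthetic dataset from `make_classification`, the fixed `random_state`, and the stratified split are all assumptions made to keep the example self-contained.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the cleaned lead data (assumption: real features not shown).
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=8, random_state=0
)

# Hold out 20% of the records for validation; stratify keeps the
# convert / not-convert ratio the same in both sets.
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Fit a gradient boosting classifier with scikit-learn's default hyper-parameters.
model = GradientBoostingClassifier(random_state=0)
model.fit(X_train, y_train)

# Accuracy on records the model has never seen.
valid_accuracy = accuracy_score(y_valid, model.predict(X_valid))

# Continuous probability of the positive class ("will convert") per record.
convert_probability = model.predict_proba(X_valid)[:, 1]

print(f"validation accuracy: {valid_accuracy:.2f}")
```

`predict` gives the will/will not convert call, while `predict_proba` gives the continuous probability that a sales team could sort leads by.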

The number of weak models is controlled by a hyper-parameter, as are the size and depth of each one. After fitting the model to the training data, we can apply it to the validation data. I started with the model's defaults and made minor adjustments to the hyper-parameters to optimize the model's performance. It became apparent to me that a higher learning rate, which scales how much each weak model contributes, had the biggest impact on performance. Through manual adjustment I was able to increase the model's accuracy from 80% to 88%. But this method was slow, and I knew a faster way.
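A manual tuning pass of this kind can be sketched as a simple loop over candidate values. The learning rates tried here and the synthetic data are assumptions for illustration; the values actually tried on the project are not recorded in this post.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the lead data (assumption).
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=8, random_state=0
)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Hypothetical candidate learning rates; scikit-learn's default is 0.1.
candidate_learning_rates = [0.05, 0.1, 0.2, 0.4]

scores = {}
for learning_rate in candidate_learning_rates:
    model = GradientBoostingClassifier(
        learning_rate=learning_rate,  # shrinks each tree's contribution
        n_estimators=100,             # number of weak models (default)
        max_depth=3,                  # depth of each weak model (default)
        random_state=0,
    )
    model.fit(X_train, y_train)
    # score() returns mean accuracy for classifiers.
    scores[learning_rate] = model.score(X_valid, y_valid)

best_learning_rate = max(scores, key=scores.get)
for learning_rate, accuracy in scores.items():
    print(f"learning_rate={learning_rate}: accuracy={accuracy:.3f}")
print(f"best learning rate: {best_learning_rate}")
```

Changing one hyper-parameter at a time like this makes its effect easy to see, but the number of runs multiplies quickly once several hyper-parameters are in play.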

Iteration #3: Machine Learning w/ Grid Search Optimization

Scikit-learn has another great module, for model selection, that compares different configurations across the hyper-parameter space and picks the one with the best performance. Automated tuning, what a concept! The tradeoff is that you don't want to give it too broad a hyper-parameter space to look through or it can take a LONG time to run. In the interest of saving time I had it search in steps of 10%, up to +/- 30% in either direction from the default hyper-parameters.
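A grid of that shape can be sketched with `GridSearchCV` from `sklearn.model_selection`. Which hyper-parameters were actually searched is not stated here, so the choice of `learning_rate` and `n_estimators`, the 3-fold cross-validation, and the synthetic data are all assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Small synthetic stand-in for the lead data (assumption) so the search runs quickly.
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=5, random_state=0
)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Steps of 10% from -30% to +30% around scikit-learn's defaults
# (learning_rate=0.1, n_estimators=100).
multipliers = [0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3]
param_grid = {
    "learning_rate": [round(0.1 * m, 3) for m in multipliers],
    "n_estimators": [int(round(100 * m)) for m in multipliers],
}

search = GridSearchCV(
    estimator=GradientBoostingClassifier(random_state=0),
    param_grid=param_grid,
    scoring="accuracy",
    cv=3,  # each configuration is scored by 3-fold cross-validation
)
search.fit(X_train, y_train)

# After fitting, the search object is refit on all training data
# with the best configuration, so it can score held-out data directly.
valid_accuracy = search.score(X_valid, y_valid)

print("best hyper-parameters:", search.best_params_)
print(f"cross-validated accuracy: {search.best_score_:.3f}")
print(f"validation accuracy: {valid_accuracy:.3f}")
```

Two hyper-parameters with seven values each already means 49 configurations, each fitted three times, which is why a narrow grid matters.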

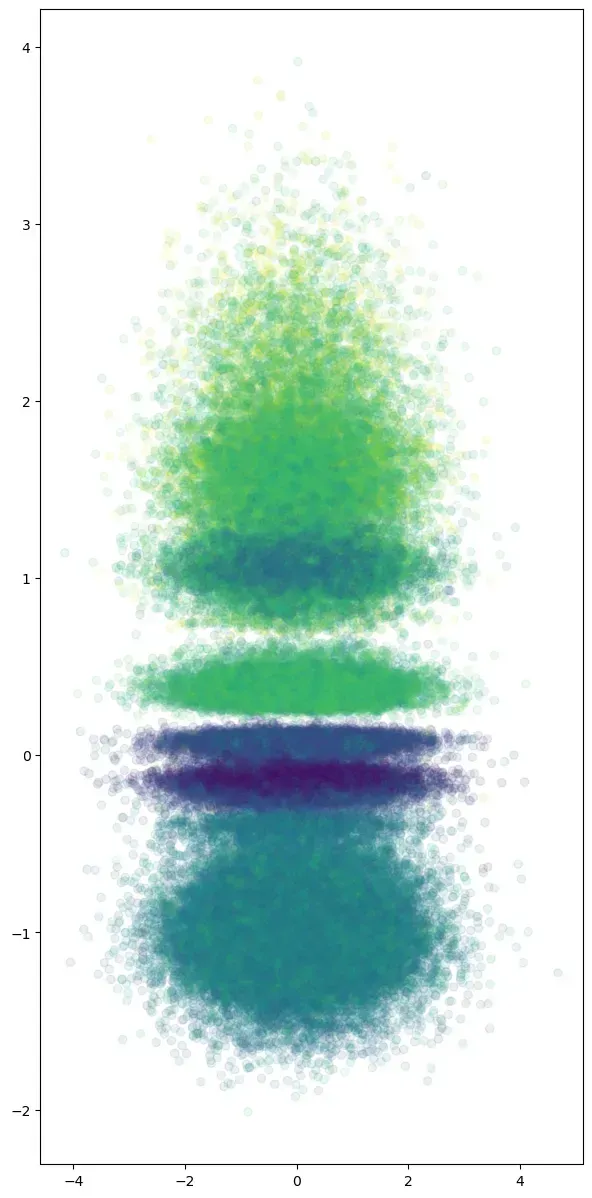

When I applied the grid search optimized hyper-parameters to the model, I got a staggering result of 92% accuracy! Again revisiting the original premise, this model is able to correctly predict whether a prospect will convert into business 92% of the time, as compared to the 45% we started with using the third-party model. Still, I wanted to see where the remaining 8% was coming from so I could have an idea of how the model might be improved in the future. I saw that the 8% were mostly false negatives, which means the model is slightly pessimistic, underestimating the conversion potential of some prospects.

This can be visualized with a Receiver Operating Characteristic (ROC) curve. ROC curves typically feature true positive rate (TPR) on the Y axis, and false positive rate (FPR) on the X axis. This means that the top left corner of the plot is the “ideal” point - an FPR of zero, and a TPR of one. This is not very realistic, but it does mean that a larger area under the curve (AUC) is usually better. The “steepness” of ROC curves is also important, since it is ideal to maximize the TPR while minimizing the FPR.
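The error breakdown and the ROC numbers can be computed with `sklearn.metrics`, roughly as sketched here. The data is a synthetic stand-in (an assumption), so the counts it prints will not match the 92% and 8% figures above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import confusion_matrix, roc_auc_score, roc_curve
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the lead data (assumption).
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=8, random_state=0
)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

model = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)
predicted = model.predict(X_valid)
probabilities = model.predict_proba(X_valid)[:, 1]

# For binary labels 0/1, ravel() yields the counts in this order.
tn, fp, fn, tp = confusion_matrix(y_valid, predicted).ravel()
print(f"false negatives (missed converters): {fn}")
print(f"false positives (wasted follow-ups): {fp}")

# One (FPR, TPR) point per probability threshold; these are the
# X and Y coordinates of the ROC curve.
fpr, tpr, thresholds = roc_curve(y_valid, probabilities)

# Area under the ROC curve: 0.5 is chance, 1.0 is the "ideal" top-left corner.
auc = roc_auc_score(y_valid, probabilities)
print(f"AUC: {auc:.3f}")
```

If false negatives dominate the errors, lowering the probability threshold used to call a lead "will convert" moves along the ROC curve toward a higher TPR, at the cost of more false positives.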

Conclusion

In conclusion, I was happy to be able to provide a model to help target the sales organization's limited time, and proud that my custom model was able to perform 2x as well as a standard third-party model. In the three months following the implementation of my classifier, there was a 15% increase in the organization's lead conversion metric, representing millions of dollars in potential new business. Still, there are some areas for improvement. False negatives potentially represent missed business if sales reps don't follow through, and so I fully intend to revisit this at a later date and see what we can do about that last 8%.